Coalition of researchers calls for more funding to study the boundary between conscious and unconscious systems.

Mariana Lenharo

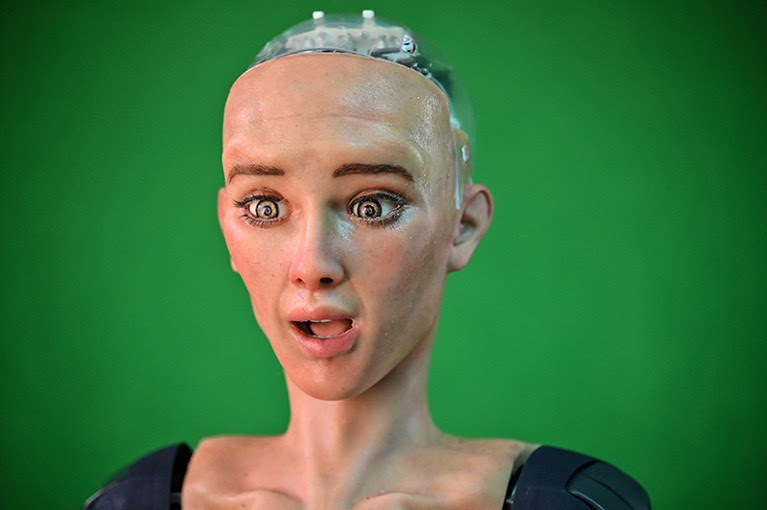

A standard method to assess whether machines are conscious has not yet been devised. Credit: Peter Parks/AFP via Getty

Could artificial intelligence (AI) systems become conscious? A coalition of consciousness scientists says that, at the moment, no one knows — and it is expressing concern about the lack of inquiry into the question.

In comments to the United Nations, members of the Association for Mathematical Consciousness Science (AMCS) call for more funding to support research on consciousness and AI. They say that scientific investigations of the boundaries between conscious and unconscious systems are urgently needed, and they cite ethical, legal and safety issues that make it crucial to understand AI consciousness. For example, if AI develops consciousness, should people be allowed to simply switch it off after use?

Such concerns have been mostly absent from recent discussions about AI safety, such as the high-profile AI Safety Summit in the United Kingdom, says AMCS board member Jonathan Mason, a mathematician based in Oxford, UK. Nor did US President Joe Biden’s executive order seeking responsible development of AI technology address issues raised by conscious AI systems.

“With everything that’s going on in AI, inevitably there’s going to be other adjacent areas of science which are going to need to catch up,” Mason says. Consciousness is one of them.

Not science fiction

It is unknown to science whether there are, or will ever be, conscious AI systems. Even knowing whether one has been developed would be a challenge, because researchers have yet to create scientifically validated methods to assess consciousness in machines, Mason says. “Our uncertainty about AI consciousness is one of many things about AI that should worry us, given the pace of progress,” says Robert Long, a philosopher at the Center for AI Safety, a non-profit research organization in San Francisco, California.

Such concerns are no longer just science fiction. Companies such as OpenAI — the firm that created the chatbot ChatGPT — are aiming to develop artificial general intelligence, a deep-learning system that’s trained to perform a wide range of intellectual tasks similar to those humans can do. Some researchers predict that this will be possible in 5–20 years. Even so, the field of consciousness research is “very undersupported”, says Mason. He notes that, to his knowledge, not a single grant was offered in 2023 to study the topic.

The resulting information gap is outlined in the AMCS’s submission to the UN High-Level Advisory Body on Artificial Intelligence, which launched in October and is scheduled to release a report in mid-2024 on how the world should govern AI technology. The AMCS submission has not been publicly released, but the body confirmed to the AMCS that the group’s comments will be part of its “foundational material” — documents that inform its recommendations about global oversight of AI systems.

Understanding what could make AI conscious, the AMCS researchers say, is necessary to evaluate the implications of conscious AI systems for society, including their possible dangers. Humans would need to assess whether such systems share human values and interests; if they do not, they could pose a risk to people.

What machines need

But humans should also consider the possible needs of conscious AI systems, the researchers say. Could such systems suffer? If we don’t recognize that an AI system has become conscious, we might inflict pain on a conscious entity, Long says: “We don’t really have a great track record of extending moral consideration to entities that don’t look and act like us.” Wrongly attributing consciousness would also be problematic, he says, because humans should not spend resources to protect systems that don’t need protection.

Some of the questions raised by the AMCS to highlight the importance of the consciousness issue are legal: should a conscious AI system be held accountable for a deliberate act of wrongdoing? And should it be granted the same rights as people? The answers might require changes to regulations and laws, the coalition writes.

And then there is the need for scientists to educate others. As companies devise ever-more capable AI systems, the public will wonder whether such systems are conscious, and scientists need to know enough to offer guidance, Mason says.

Other consciousness researchers echo this concern. Philosopher Susan Schneider, the director of the Center for the Future Mind at Florida Atlantic University in Boca Raton, says that chatbots such as ChatGPT seem so human-like in their behaviour that people are justifiably confused by them. Without in-depth analysis from scientists, some people might jump to the conclusion that these systems are conscious, whereas other members of the public might dismiss or even ridicule concerns over AI consciousness.

To mitigate the risks, the AMCS — which includes mathematicians, computer scientists and philosophers — is calling on governments and the private sector to fund more research on AI consciousness. It wouldn’t take much funding to advance the field: despite the limited support to date, relevant work is already underway. For example, Long and 18 other researchers have developed a checklist of criteria to assess whether a system has a high chance of being conscious. The paper¹, published in the arXiv preprint repository in August and not yet peer reviewed, derives its criteria from six prominent theories explaining the biological basis of consciousness.

“There’s lots of potential for progress,” Mason says.

doi: https://doi.org/10.1038/d41586-023-04047-6
