by Ingrid Fadelli, Medical Xpress

MEG recordings are continuously aligned to the deep representation of the images, which can then condition the generation of images at each instant. Credit: Défossez et al.

Recent technological advancements have opened invaluable opportunities for assisting people who are experiencing impairments or disabilities. For instance, they have enabled the creation of tools to support physical rehabilitation, to practice social skills, and to provide daily assistance with specific tasks.

Researchers at Meta AI recently developed a promising, non-invasive method to decode speech from a person’s brain activity, which could allow people who are unable to speak to relay their thoughts via a computer interface. Their proposed method, presented in Nature Machine Intelligence, combines a brain imaging technique with machine learning.

“After a stroke, or a brain disease, many patients lose their ability to speak,” Jean-Rémi King, Research Scientist at Meta, told Medical Xpress. “In the past couple of years, major progress has been achieved to develop a neural prosthesis: a device, typically implanted on the motor cortex of the patients, which can be used, through AI, to control a computer interface. This possibility, however, still requires brain surgery, and is thus not without risks.”

Beyond the need for surgery, most proposed approaches for decoding speech rely on implanted electrodes, and keeping these electrodes working correctly for more than a few months is challenging. The key objective of the recent study by King and his colleagues was to explore an alternative, non-invasive route for decoding speech representations.

“Instead of using intracranial electrodes, we employ magneto-encephalography,” King explained. “This is an imaging technique relying on a non-invasive device that can take more than a thousand snapshots of brain activity per second. As these brain signals are very difficult to interpret, we train an AI system to decode them into speech segments.”

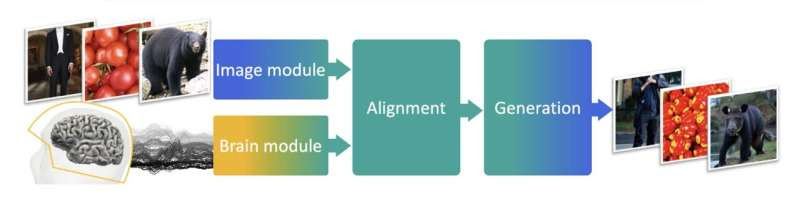

Essentially, King and his colleagues developed an AI system and trained it to analyze magneto-encephalography recordings, predicting speech from the brain activity captured in them. Their AI system consists of two key modules, dubbed the ‘brain module’ and the ‘speech module.’

The brain module was trained to extract information from human brain activity recorded using magneto-encephalography. The speech module, on the other hand, identifies the speech representations that are to be decoded.

“The two modules are parameterized such that we can infer, at each instant, what is being heard by the participant,” King said.
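To make the matching idea concrete, here is a minimal Python sketch of how the outputs of two such modules could be compared. It is an illustration under stated assumptions, not the researchers' implementation: the function names `cosine_similarity` and `decode_segment`, the three-dimensional toy vectors, and the choice of cosine similarity as the matching score are all hypothetical and do not come from the article.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def decode_segment(brain_vector, candidate_speech_vectors):
    """Return (index of the best-matching candidate, list of all similarity scores).

    brain_vector stands in for the output of a hypothetical brain module;
    candidate_speech_vectors stand in for the outputs of a hypothetical
    speech module, one vector per candidate speech segment.
    """
    scores = [cosine_similarity(brain_vector, c) for c in candidate_speech_vectors]
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return best_index, scores


# Toy data (assumed for illustration only): three candidate speech segments.
candidates = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
# A made-up brain-module output that points mostly toward the second candidate.
brain_output = [0.1, 0.9, 0.2]

best_index, scores = decode_segment(brain_output, candidates)
print("Best-matching candidate index:", best_index)
```

In this toy setup, decoding is a retrieval problem: the system does not generate speech from scratch, it picks the candidate segment whose representation best matches the one inferred from brain activity.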

The researchers assessed their proposed approach in an initial study involving 175 human participants. These participants were asked to listen to narrated short stories and isolated spoken sentences while their brain activity was recorded using magneto-encephalography, or an alternative technique known as electroencephalography.

The team achieved the best results when analyzing three seconds of magneto-encephalography signals. Specifically, they could identify the corresponding speech segment from among more than 1,000 candidates with an average accuracy of up to 41% across participants, and with accuracies of up to 80% for some participants.
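For a sense of scale, the short Python sketch below compares the reported accuracy with what random guessing would achieve. It is a back-of-the-envelope illustration: the helper names `top1_accuracy` and `chance_level` are hypothetical, and the candidate count is rounded down to 1,000 because the article only says "over 1,000."

```python
def top1_accuracy(predicted, actual):
    """Fraction of segments where the top-ranked candidate is the correct one."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not actual:
        raise ValueError("at least one segment is required")
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return correct / len(actual)


def chance_level(num_candidates):
    """Expected accuracy when picking one candidate uniformly at random."""
    return 1.0 / num_candidates


# Assumption: 1,000 is used as a round lower bound for "over 1,000 possibilities".
NUM_CANDIDATES = 1000
REPORTED_ACCURACY = 0.41  # average accuracy reported in the article

chance = chance_level(NUM_CANDIDATES)
ratio = REPORTED_ACCURACY / chance

print(f"Chance level: {chance:.1%}")
print(f"Reported accuracy is roughly {round(ratio)} times chance")

# Toy usage of top1_accuracy with made-up predictions: 3 of 4 are correct.
example = top1_accuracy([1, 2, 3, 4], [1, 2, 0, 4])
```

Because the true number of candidates is larger than 1,000, chance is even lower than this estimate, so the ratio computed here is a conservative one.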

“We were surprised by the decoding performance obtained,” King said. “In most cases, we can retrieve what the participants hear, and if the decoder makes a mistake, it tends to be semantically similar to the target phrase.”

The team’s proposed speech decoding system compared favorably to various baseline approaches, highlighting its potential value for future applications. As it does not require invasive surgical procedures or the use of brain implants, it could also be easier to implement in real-world settings.

“Our team is devoted to fundamental research: to understand how the brain works, and how this functioning can relate and inform AI,” King said. “There is a long road before a practical application, but our hope is that this development could help patients whose communication is limited or prevented by paralysis. The major next step, in this regard, is to move beyond decoding perceived speech, and to decode produced speech.”

The researchers’ AI-based system is still in its early stages of development and will require significant improvements before it can be tested and introduced in clinical settings. Nonetheless, this recent work unveiled the potential of creating less invasive technologies to assist patients who have speech-related impairments.

“Our team is primarily focused on understanding how the brain functions,” King added. “We are thus trying to develop these tools to quantify and understand the similarities between AI and the brain, not only in the context of speech, but also for other modalities, like visual perception.”

More information: Alexandre Défossez et al, Decoding speech perception from non-invasive brain recordings, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00714-5.

Journal information: Nature Machine Intelligence

© 2023 Science X Network
