Implants and other technologies that decode neural activity can restore people’s abilities to move and speak — and help researchers to understand how the brain works.

By Miryam Naddaf

Scientists have studied how brain–computer interfaces, such as this non-invasive cap, change brain activity. Credit: Silvia Marchesotti

Moving a prosthetic arm. Controlling a speaking avatar. Typing at speed. These are all things that people with paralysis have learnt to do using brain–computer interfaces (BCIs), implanted devices that are controlled by thought alone.

These devices capture neural activity using dozens to hundreds of electrodes embedded in the brain. A decoder system analyses the signals and translates them into commands.
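To make the decoding step concrete, here is a minimal sketch in Python of one of the simplest possible decoders, a nearest-centroid classifier. It assumes the signals have already been reduced to firing rates per electrode. The three channels, the command names and all numbers are invented for illustration. Real systems use more sophisticated methods, such as Kalman filters or recurrent neural networks, and far more channels.

```python
import math
from statistics import fmean

# Hypothetical training data: firing rates (spikes per second) on 3 electrodes,
# recorded while a participant attempts each movement. All values are invented.
TRAINING = {
    "open_hand":  [[12.0, 3.0, 8.0], [11.0, 4.0, 9.0], [13.0, 2.0, 7.0]],
    "close_hand": [[2.0, 14.0, 6.0], [3.0, 15.0, 5.0], [1.0, 13.0, 7.0]],
    "rest":       [[1.0, 2.0, 1.0], [2.0, 1.0, 2.0], [1.0, 1.0, 1.0]],
}


def fit_centroids(training):
    """Average, channel by channel, the firing-rate vectors recorded for each command."""
    return {
        command: [fmean(channel) for channel in zip(*trials)]
        for command, trials in training.items()
    }


def decode(rates, centroids):
    """Return the command whose average pattern is closest (Euclidean distance) to `rates`."""
    return min(centroids, key=lambda command: math.dist(rates, centroids[command]))


centroids = fit_centroids(TRAINING)
print(decode([12.5, 3.5, 8.5], centroids))  # open_hand
```

Each new window of neural activity is matched against the average pattern for each command, and the best match becomes the command sent to the prosthetic or computer.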

Although the main impetus behind the work is to help restore functions to people with paralysis, the technology also gives researchers a unique way to explore how the human brain is organized, and with greater resolution than most other methods.

Scientists have used these opportunities to learn some basic lessons about the brain. Results are overturning assumptions about brain anatomy, for example, revealing that regions often have much fuzzier boundaries and job descriptions than was thought. Such studies are also helping researchers to work out how BCIs themselves affect the brain and, crucially, how to improve the devices.

“BCIs in humans have given us a chance to record single-neuron activity for a lot of brain areas that nobody’s ever really been able to do in this way,” says Frank Willett, a neuroscientist at Stanford University in California who is working on a BCI for speech.

The devices also allow measurements over much longer time spans than classical tools do, says Edward Chang, a neurosurgeon at the University of California, San Francisco. “BCIs are really pushing the limits, being able to record over not just days, weeks, but months, years at a time,” he says. “So you can study things like learning, you can study things like plasticity, you can learn tasks that require much, much more time to understand.”

Recorded history

The idea that the electrical activity of the human brain could be recorded first gained support 100 years ago. German psychiatrist Hans Berger attached electrodes to the scalp of a 17-year-old boy whose surgery for a brain tumour had left a hole in his skull. When Berger recorded above this opening, he became the first to observe oscillations in human brain activity, and gave the measurement a name: the EEG (electroencephalogram).

Researchers immediately saw that recording from inside the brain could be even more valuable; Berger and others used surgery to place electrodes on the surface of the cortex to study the brain and diagnose epilepsy. Recording from implanted electrodes is still a standard method for pinpointing where epileptic seizures begin, so that the condition can be treated using surgery.


Then, in the 1970s, researchers began to use signals recorded from further inside animal brains to control external machines, giving rise to the first implanted brain–machine interfaces.

In 2004, Matt Nagle, who was paralysed after a spinal injury, became the first person to receive a long-term invasive BCI system that used multiple electrodes to record activity from individual neurons in his primary motor cortex¹. Nagle was able to use his system to open and close a prosthetic hand, and to perform basic tasks with a robotic arm.

Researchers have also used EEG readings — collected using non-invasive electrodes placed on a person’s scalp — to provide signals for BCIs. These have allowed paralysed people to control wheelchairs, robotic arms and gaming devices, but the signals are weaker and the data less reliable than with invasive devices.

So far, about 50 people have had a BCI implanted, and advances in artificial intelligence, decoding tools and hardware have propelled the field forwards.

Electrode arrays, for instance, are becoming more sophisticated. A technology called Neuropixels has not yet been incorporated into a BCI, but is in use for fundamental research. The array of silicon electrodes, each thinner than a human hair, has nearly 1,000 sensors and is capable of detecting electrical signals from a single neuron. Researchers began using Neuropixels arrays in animals seven years ago, and two papers published in the past three months demonstrate their use for questions that can be answered only in humans: how the brain produces and perceives vowel sounds in speech²,³.

Commercial activity is also ramping up. In January, the California-based neurotechnology company Neuralink, founded by entrepreneur Elon Musk, implanted a BCI into a person for the first time. As with other intracortical BCIs, the implant can record from individual neurons, but unlike most other devices, it has a wireless connection to a computer.

And although the main driver is clinical benefit, these windows into the brain have revealed some surprising lessons about its function along the way.

Fuzzy boundaries

Textbooks often describe brain regions as having discrete boundaries or compartments. But BCI recordings suggest that this is not always the case.

Last year, Willett and his team were using a BCI implant for speech generation in a person with motor neuron disease (amyotrophic lateral sclerosis). They expected to find that neurons in a motor control area called the precentral gyrus would be grouped according to which speech articulator they were tuned to: jaw, larynx, lips or tongue. Instead, neurons with different targets were jumbled up⁴. “The anatomy was very intermixed,” says Willett.

They also found that Broca’s area, a brain region thought to have a role in speech production and articulation, contained little to no information about words, facial movements or units of sound called phonemes. “It seems surprising that it’s not really involved in speech production per se,” says Willett. Previous findings using other methods had hinted at this more nuanced picture (see, for example, ref. 5).

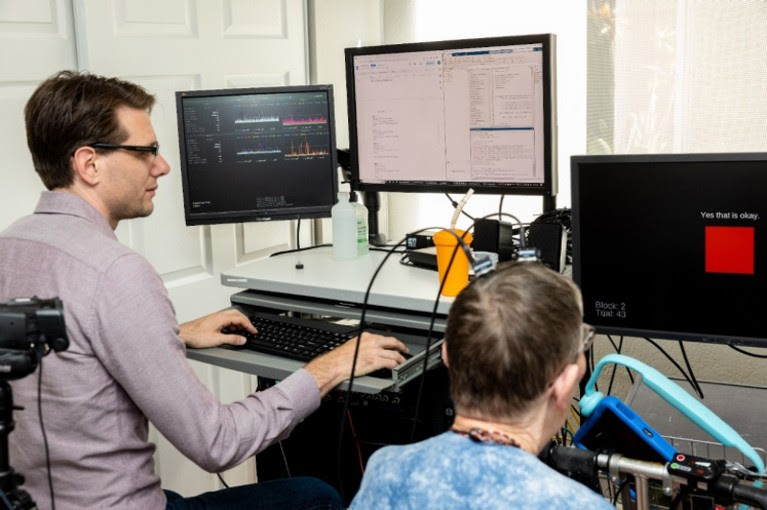


Researcher Frank Willett operates software that translates Pat Bennett’s attempts at speech into words on a screen, through a BCI. Credit: Steve Fisch/Stanford Medicine

In a 2020 paper on movement⁶, Willett and his colleagues recorded signals in two people with different levels of movement limitation, focusing on an area in the premotor cortex that is responsible for moving the hands. Using the BCI, they discovered that the area contains neural codes for all four limbs together, not just for the hands, as previously presumed. This challenges the classical idea that each body part is represented in its own patch of a topographical map in the brain’s cortex, a theory that has been embedded in medical education for nearly 90 years.

“That’s something that you would only see if you’re able to record single-neuron activity from humans, which is so rare,” says Willett.

Nick Ramsey, a cognitive neuroscientist at University Medical Center Utrecht in the Netherlands, made similar observations when his team implanted a BCI in a part of the motor cortex that corresponds to hand movement⁷. The motor cortex in one hemisphere of the brain typically controls movements on the opposite side of the body. But when the person attempted to move her right hand, electrodes implanted in the left hemisphere picked up signals for both the right hand and the left hand, a finding that was unexpected, says Ramsey. “We’re trying to find out whether that’s important” for making movements, he says.


Movement relies on a lot of coordination, and brain activity has to synchronize it all, explains Ramsey. Holding out an arm affects balance, for instance, and the brain has to manage those shifts across the body, which could explain the dispersed activity. “There’s a lot of potential in that kind of research that we haven’t thought of before,” he says.

To some scientists, these fuzzy anatomical boundaries are not surprising. Our understanding of the brain is based on average measurements that paint a generalized image of how this complex organ is arranged, says Luca Tonin, an information engineer at the University of Padua in Italy. Individuals are bound to diverge from the average.

“Our brains look different in the details,” says Juan Álvaro Gallego, a neuroscientist at Imperial College London.

To others, findings from such a small number of people should be interpreted with caution. “We need to take everything that we’re learning with a grain of salt and put it in context,” says Chang. “Just because we can record from single neurons doesn’t mean that’s the most important data, or the whole truth.”

Flexible thinking

BCI technology has also helped researchers to reveal the neural patterns behind thinking and imagining.

Christian Herff, a computational neuroscientist at Maastricht University in the Netherlands, studies how the brain encodes imagined speech. His team developed a BCI implant capable of generating speech in real time when participants either whisper or imagine speaking without moving their lips or making a sound⁸. The brain signals picked up by the BCI device during both whispered and imagined speech were similar to those for spoken speech: they share areas and patterns of activity, but are not the same, explains Herff.

That means, he says, that even if someone can’t speak, they could still imagine speech and work a BCI. “This drastically increases the people who could use such a speech BCI on a clinical basis,” says Herff.

The fact that people with paralysis retain the programmes for speech or movement, even when their bodies can no longer respond, helps researchers to draw conclusions about how plastic the brain is — that is, to what extent it can reshape and remodel its neural pathways.

It is known that injury, trauma and disease in the brain can alter the strength of connections between neurons and cause neural circuits to reconfigure or make new connections. For instance, work in rats with spinal cord injuries has shown that brain regions that once controlled now-paralysed limbs can begin to control parts of the body that are still functional⁹.

But BCI studies have muddied this picture. Jennifer Collinger, a neural engineer at the University of Pittsburgh in Pennsylvania, and her colleagues used an intracortical BCI in a man in his 30s who has a spinal cord injury. He can still move his wrist and elbow, but his fingers are paralysed.

Collinger’s team noticed that the original maps of the hand were preserved in his brain¹⁰. When the man attempted to move his fingers, the team saw activity in the motor area, even though his hand did not actually move.


Brain–computer interface technology is helping people with paralysis to speak, and providing lessons about brain anatomy. Credit: Mike Kai Chen/The New York Times/Redux/eyevine

“We see the typical organization,” she says. “Whether they have changed at all before or after injury, slightly, we can’t really say.” That doesn’t mean the brain isn’t plastic, Collinger notes. But some brain areas might be more flexible in this regard than others. “For example, plasticity seems to be more limited in sensory cortex compared to motor cortex,” she adds.

In conditions in which the brain is damaged, such as stroke, BCIs can be used alongside other therapeutic interventions to help train a new brain area to take over from a damaged region. In such situations, “people are performing tasks by modulating areas of the brain that originally were not evolved to do so”, says José del R. Millán, a neural engineer at the University of Texas at Austin, who studies how to deploy BCI-induced plasticity in rehabilitation.

In a clinical trial, Millán and his colleagues trained 14 participants with chronic stroke (the stage that begins 6 months or more after a stroke, when recovery has slowed) to use non-invasive BCIs for 6 weeks¹¹.


In one group, the BCI was connected to a device that applied electric currents to activate nerves in paralysed muscles, a therapeutic technique known as functional electrical stimulation (FES). Whenever the BCI decoded the participants’ attempts to extend their hands, it stimulated the muscles that control wrist and finger extension. Participants in the control group had the same set-up, but received random electrical stimulation.
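The difference between the two groups comes down to timing. Below is a minimal sketch in Python, assuming the decoder outputs, for each short time window, a probability that the participant is attempting to extend the hand. The threshold, the probabilities and the function names are hypothetical rather than taken from the study, and matching the sham group's number of pulses to the BCI group's is likewise an assumption about how the random stimulation was set up.

```python
import random

THRESHOLD = 0.7  # hypothetical decoder-confidence threshold for "attempting to extend"


def bci_fes_schedule(attempt_probabilities, threshold=THRESHOLD):
    """BCI group: stimulate only in windows where the decoder detects an extension attempt."""
    return [p >= threshold for p in attempt_probabilities]


def sham_schedule(n_windows, n_stimulations, seed=0):
    """Control group (assumed design): the same number of pulses, at randomly chosen windows."""
    rng = random.Random(seed)
    chosen = set(rng.sample(range(n_windows), n_stimulations))
    return [i in chosen for i in range(n_windows)]


probs = [0.1, 0.85, 0.9, 0.2, 0.75, 0.3]  # invented decoder outputs, one per window
contingent = bci_fes_schedule(probs)
sham = sham_schedule(len(probs), sum(contingent))
print(contingent)  # [False, True, True, False, True, False]
```

In this sketch, the only thing that differs between the two schedules is whether stimulation coincides with a decoded attempt to move.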

Using EEG recordings, Millán’s team found that the participants using BCI-guided FES had increased connectivity between motor areas in the affected brain hemisphere compared with the control group. Over time, the BCI–FES participants became able to extend their hands, and their motor recovery lasted for 6–12 months after the end of the BCI-based rehabilitation therapy.

What BCIs do to the brain

In Millán’s study, the BCI helped to drive learning in the brain. This feedback loop between human and machine is a key element of BCIs, because it lets users steer their own brain activity: participants can learn to adjust their mental focus to improve the decoder’s output in real time.

Whereas most research focuses on optimizing BCI devices and improving their decoding performance, “little attention has been paid to what actually happens in the brain when you use the thing”, says Silvia Marchesotti, a neuroengineer at the University of Geneva, Switzerland.

Marchesotti studies how the brain changes when people use a BCI for language generation, looking not just in the regions where the BCI sits, but more widely. Her team found that, when 15 healthy participants were trained to control a non-invasive BCI over 5 days, activity across the brain increased in frequency bands known to be important for language and became more focused over time¹².

One possible explanation is that the brain becomes more efficient at controlling the device and requires fewer neural resources to do the tasks, says Marchesotti.

Studying how the brain behaves during BCI use is an emerging field, and researchers hope it will both benefit users and improve BCI systems. For example, recording activity across the brain allows scientists to assess whether placing electrodes at additional sites would make decoding more accurate.

Understanding more about brain organization could help to build better decoders and prevent them from making errors. In a preprint posted last month¹³, Ramsey and his colleagues showed that a speech decoder can become confused between a user speaking a sentence and listening to it. They implanted BCIs in the ventral sensorimotor cortex (an area commonly targeted for speech decoding) in five people undergoing epilepsy surgery. They found that patterns of brain activity seen when participants spoke a set of sentences closely resembled those observed when they listened to a recording of the same sentences. This implies that a speech decoder might not be able to differentiate between heard and spoken words when trying to generate speech.

The scope of current BCI research is still limited, with trials recruiting a very small number of participants and focusing mainly on brain regions involved in motor function.

“You have at least tenfold as many researchers working on BCIs as you have patients using BCIs,” says Herff.

Researchers value the rare chances to record directly from human neurons, but they are driven by the need to restore function and meet medical needs. “This is neurosurgery,” says Collinger. “It’s not to be taken lightly.”

To Chang, the field naturally operates as a blend of discovery and clinical application. “It’s hard for me to even imagine what our research would be like if we were just doing basic discovery or only doing the BCI work alone,” he says. “It seems that both really are critical for moving the field forwards.”

Nature 626, 706-708 (2024)

doi: https://doi.org/10.1038/d41586-024-00481-2
