by Tilburg University

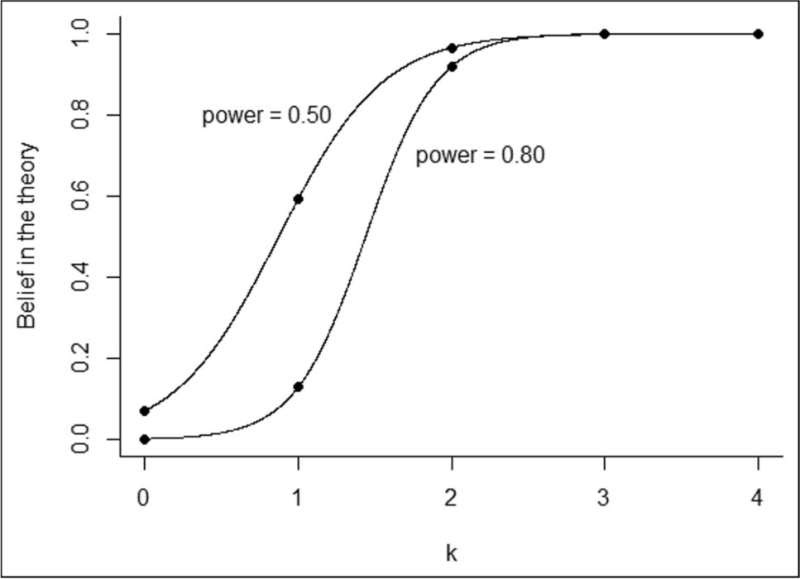

Belief in the theory based on Bayesian inference, as a function of statistical power (0.50 and 0.80) and the number of statistically significant results, k, given prior probabilities equal to 0.5. The beliefs in the theory for k = 0,1,2,3,4 are [0.071, 0.593, 0.965, 0.998, 0.999] and [0.002, 0.013, 0.919, 0.999, 1.000] for a statistical power of 0.50 and 0.80, respectively. Credit: Psychonomic Bulletin & Review (2023). DOI: 10.3758/s13423-022-02235-5

When do research results provide sufficient evidence to support a hypothesis? It turns out that psychology researchers are usually unable to judge this properly. They often demand more evidence than is necessary. As a result, valid research sometimes ends up in the desk drawer, and therapies or interventions with potential never reach the treatment room. Such strict standards can also lead researchers to steer results, consciously or unconsciously, toward a desired effect. This undermines the reliability of research.

This finding emerges from research by Olmo van den Akker and colleagues at the Meta-research Center of the Department of Methodology and Statistics at Tilburg University.

A research paper in psychology often consists of multiple studies, which together form the basis for concluding whether an effect exists, for example whether a medication or psychological treatment works. Van den Akker’s research suggests that research findings already strongly indicate an effect even if only two out of four studies show a statistically significant result.
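The reasoning behind the "two out of four" claim can be sketched with Bayes' rule. The following is a minimal illustration, not the authors' code: it assumes four independent studies, a significance level of 0.05, a binomial model for the number of significant results, and a prior of 0.5. The function name `belief_in_theory` and its parameters are hypothetical, chosen for this example.

```python
from math import comb


def belief_in_theory(k, n=4, power=0.5, alpha=0.05, prior=0.5):
    """Posterior probability that the effect exists, given k of n significant results.

    Assumptions (not taken from the paper's code): the n studies are
    independent, and each is significant with probability `power` if the
    effect exists and with probability `alpha` if it does not.
    """
    # Likelihood of k significant results if the effect is real.
    like_effect = comb(n, k) * power**k * (1 - power) ** (n - k)
    # Likelihood of k significant results if there is no effect.
    like_null = comb(n, k) * alpha**k * (1 - alpha) ** (n - k)
    # Bayes' rule: weight each likelihood by its prior probability.
    return prior * like_effect / (prior * like_effect + (1 - prior) * like_null)


for power in (0.5, 0.8):
    beliefs = [round(belief_in_theory(k, power=power), 3) for k in range(5)]
    print(f"power={power}: {beliefs}")
```

Under these assumptions, two significant results out of four give a belief of about 0.965 at a power of 0.50 and about 0.919 at a power of 0.80, in line with the values in the figure caption. The intuition is that two significant results are very unlikely when no effect exists, so they shift belief strongly toward the theory even though the other two studies were not significant.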

However, a survey of more than 1,800 psychology researchers from around the world found that only 2% realize this. Most of these researchers require that every single study within an article show a result in the same direction before they are convinced that a particular effect exists. This is reflected in published articles in psychology, almost all of which consist solely of studies with statistically significant results.

This situation leads to several problems, Van den Akker argues. In science, the evidence for a particular hypothesis is often represented by a p-value. If a p-value is less than 0.05, that is usually considered sufficient evidence for a particular relationship between variables. The problem is that a phenomenon called “p-hacking” occurs, in which researchers steer toward a p-value below 0.05 after reviewing the data.

The evidence is then artificially manipulated instead of researchers letting the data speak for themselves. Partly because of p-hacking, there is currently a replication crisis in psychological research, in which repeated studies often fail to find the same effect as the original. Moreover, the strict attitude toward scientific evidence can cause valid solutions to all sorts of psychological problems to remain unjustly on the shelf.

Part of the solution, according to Van den Akker, lies in increasing the knowledge of statistics among scientists. This can be done, for example, by including scenarios from scientific practice in statistics education. Such scenarios can make future scientists more aware of the rules of thumb they use in evaluating their own research and that of others.

The paper is published in the journal Psychonomic Bulletin & Review.
